Multimodal Chain-of-Thought

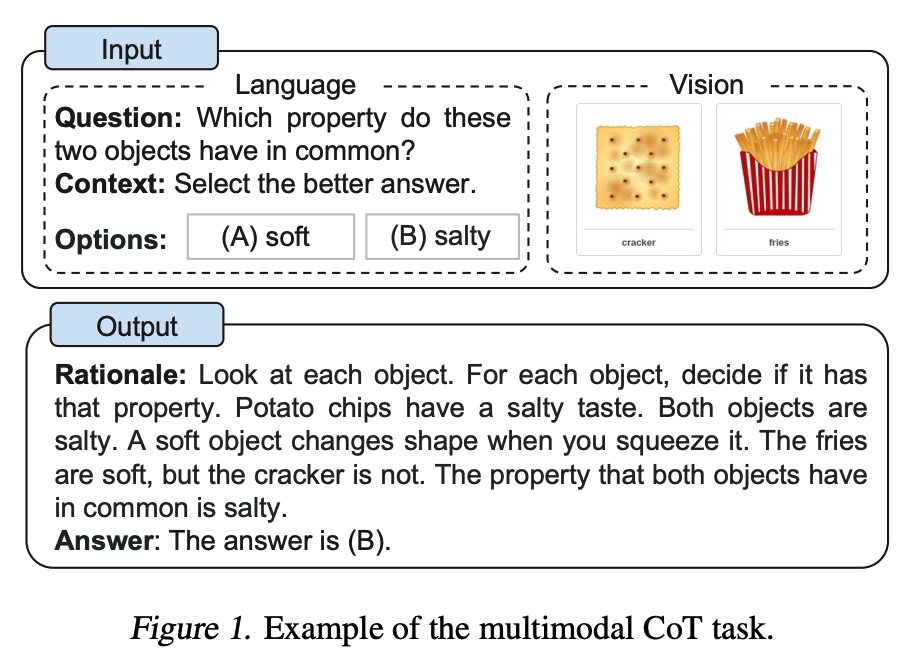

Multimodal Chain-of-Thought (MM-CoT) prompting is an advanced technique that combines visual and textual information to guide language models through step-by-step reasoning across multiple modalities. This approach enables models to leverage both image understanding and text comprehension to solve complex problems that require integrated analysis of visual and linguistic elements.

Introduced by Zhang et al. (2023), MM-CoT significantly improves performance on multimodal reasoning tasks by explicitly encouraging models to articulate their reasoning process while drawing connections between visual elements and textual context.

🎯 Core Principles

Multimodal Chain-of-Thought operates on several key principles:

The Four Pillars of MM-CoT

- Cross-Modal Integration: Seamlessly combining information from visual and textual sources

- Explicit Reasoning: Articulating step-by-step thought processes that reference both modalities

- Visual Grounding: Connecting abstract reasoning to specific visual elements

- Contextual Synthesis: Building comprehensive understanding through modal interaction

Types of Multimodal Reasoning

Visual Question Answering with Reasoning

[Image: A photograph showing a crowded train station with people wearing winter coats and carrying umbrellas]

Question: What can you infer about the weather conditions when this photo was taken?

Multimodal CoT Response:

Step 1: I observe that people in the image are wearing heavy winter coats and jackets, suggesting cold temperatures.

Step 2: Many individuals are carrying umbrellas, which indicates precipitation (rain or snow).

Step 3: The combination of winter clothing and umbrellas suggests it's likely a cold, wet day.

Step 4: The indoor setting of the train station might also indicate people are seeking shelter from harsh weather outside.

Conclusion: Based on the visual evidence of winter clothing and umbrellas, the weather was likely cold and rainy or snowy when this photo was taken.

Document Understanding and Analysis

[Image: A business report page with charts, graphs, and text sections]

Question: What are the key insights from this quarterly report, and what trends can you identify?

Multimodal CoT Response:

Step 1: I'll examine the title and headers to understand the report structure and timeframe.

Step 2: Looking at the bar chart on the left, I can see quarterly revenue data showing an upward trend from Q1 to Q3.

Step 3: The pie chart on the right shows market share distribution, with three main segments.

Step 4: The text mentions specific percentage increases, which I'll correlate with the visual data.

Step 5: Combining the textual financial data with the visual trends, I can identify growth patterns and areas of concern.

Key Insights: The report shows 15% revenue growth over three quarters, with Product A maintaining 45% market share. The upward trend in the bar chart aligns with the textual data indicating successful expansion in two key markets.

Scientific and Technical Analysis

[Image: A laboratory setup with beakers, measuring equipment, and a data readout display]

Question: Analyze this experimental setup and predict what type of experiment is being conducted.

Multimodal CoT Response:

Step 1: I observe multiple glass beakers with different colored solutions, suggesting chemical mixing or reactions.

Step 2: There's a digital scale visible, indicating precise measurement is important for this experiment.

Step 3: The data readout display shows pH readings and temperature values, suggesting monitoring of chemical properties.

Step 4: The presence of safety equipment (gloves, goggles visible in frame) indicates this involves potentially hazardous chemicals.

Step 5: The systematic arrangement and measurement tools suggest this is a controlled, quantitative experiment.

Analysis: This appears to be a chemical titration or pH analysis experiment where precise measurements and monitoring of chemical properties are crucial for determining substance concentrations or reaction endpoints.

Advanced MM-CoT Techniques

Multi-Step Visual Problem Solving

[Image: A complex architectural blueprint with measurements, annotations, and multiple views]

Question: Calculate the total square footage of the main living area and identify any potential design issues.

Multimodal CoT Response:

Step 1: I'll identify the main living area boundaries by examining the floor plan layout and room labels.

Step 2: From the blueprint, I can see the living room dimensions marked as 20' × 15' and the adjacent dining area as 12' × 14'.

Step 3: I'll calculate each area: Living room = 20 × 15 = 300 sq ft; Dining area = 12 × 14 = 168 sq ft.

Step 4: Checking the blueprint annotations, these areas appear to be connected as one open space.

Step 5: Total main living area = 300 + 168 = 468 square feet.

Step 6: Looking for design issues: I notice the kitchen entrance creates a narrow walkway (marked as 3' wide) which might create traffic flow problems.

Step 7: The window placement on the north wall might limit furniture arrangement options.

Result: Total main living area is 468 sq ft. Potential issues include the narrow kitchen entrance passage and limited furniture placement due to north-facing window positioning.

Comparative Visual Analysis

[Images: Two similar charts showing company performance data for 2022 and 2023]

Question: Compare these annual performance charts and identify the most significant changes.

Multimodal CoT Response:

Step 1: I'll examine the chart structures to ensure I'm comparing equivalent metrics between the two years.

Step 2: Looking at the revenue bars, 2023 shows approximately 25% higher values than 2022 across all quarters.

Step 3: The profit margin line graph shows 2022 had more volatility, while 2023 demonstrates steadier growth.

Step 4: In the 2023 chart, I notice a new product category wasn't present in 2022's data.

Step 5: The color coding shows different market segments, and the blue segment (international sales) appears significantly larger in 2023.

Step 6: The expense breakdown pie charts show a shift toward higher R&D spending in 2023 (18% vs 12% in 2022).

Key Changes: Revenue increased 25%, profit margins stabilized, international sales expanded significantly, and R&D investment increased from 12% to 18% of total expenses, indicating strategic growth focus.

Spatial and Temporal Reasoning

[Image: A time-lapse satellite image showing urban development over several years]

Question: Analyze the urban growth patterns and predict future development trends.

Multimodal CoT Response:

Step 1: I'll examine the earliest timeframe image to establish the baseline urban footprint.

Step 2: Tracking the progression through subsequent time periods, I can see development expanding primarily toward the southeast.

Step 3: The expansion pattern follows major transportation routes visible in the imagery.

Step 4: Green spaces (parks) remain largely unchanged, suggesting zoning protection.

Step 5: The rate of expansion appears to accelerate in the later time periods based on the density of new development.

Step 6: Industrial areas (rectangular buildings) are concentrating in the northern section.

Step 7: Residential development (smaller, scattered buildings) follows transportation corridors.

Prediction: Future development will likely continue southeastern expansion along transportation routes, with industrial development concentrating north and residential growth following corridor patterns while respecting protected green spaces.

Implementation Strategies

Structured Visual Analysis Framework

Framework for consistent multimodal reasoning:

1. **Visual Inventory**: Systematically catalog all visual elements

2. **Text Integration**: Connect textual information with visual observations

3. **Cross-Modal Connections**: Identify relationships between visual and textual elements

4. **Progressive Analysis**: Build understanding step by step

5. **Synthesis**: Combine insights from all modalities for final conclusions

Example Application:

[Image: Medical X-ray with patient report text]

Step 1 (Visual Inventory): I see a chest X-ray showing lung fields, heart silhouette, and rib cage.

Step 2 (Text Integration): The report mentions patient symptoms of chest pain and shortness of breath.

Step 3 (Cross-Modal Connections): The symptoms align with visual indicators I should examine.

Step 4 (Progressive Analysis): I'll systematically examine lung clarity, heart size, and any abnormalities.

Step 5 (Synthesis): Combining visual findings with reported symptoms to form assessment.

Error Prevention in Multimodal Reasoning

Common pitfalls and prevention strategies:

1. **Visual Misinterpretation**:

Prevention: Describe what you see before interpreting meaning

2. **Modal Isolation**:

Prevention: Explicitly connect visual and textual elements

3. **Assumption Errors**:

Prevention: Distinguish between direct observations and inferences

4. **Context Neglect**:

Prevention: Consider the broader context of both visual and textual information

Example with Error Prevention:

[Image: Graph showing declining trend line]

Incorrect: "This shows the company is failing."

Correct: "I observe a declining trend line in this graph. To properly interpret this, I need to consider: what metric is being measured (from the axis labels), the time period (from the x-axis), and any accompanying text that provides context about what this decline represents."

Domain-Specific Applications

Educational Content Analysis

[Image: Textbook page with diagrams, formulas, and explanatory text]

Question: Explain the concept being taught and how the visual elements support understanding.

Educational MM-CoT:

Step 1: I identify this as a physics page about wave motion based on the chapter heading.

Step 2: The diagram shows sinusoidal waves with labeled wavelength and amplitude markers.

Step 3: The mathematical formulas relate wave speed to frequency and wavelength (v = fλ).

Step 4: The text explains how wave properties affect real-world phenomena like sound and light.

Step 5: The visual elements reinforce the mathematical relationships by showing measurable wave characteristics.

Explanation: This page teaches wave physics by combining visual wave diagrams with mathematical formulas and practical examples, helping students understand abstract concepts through multiple learning modalities.

Medical Imaging Analysis

[Image: Medical scan with accompanying patient history and symptoms]

Medical MM-CoT Reasoning:

Step 1: Review patient history and presenting symptoms from the text.

Step 2: Systematically examine the imaging for relevant anatomical structures.

Step 3: Identify any abnormalities or areas of concern in the visual data.

Step 4: Correlate imaging findings with clinical symptoms.

Step 5: Consider differential diagnoses based on combined evidence.

Step 6: Recommend additional testing or follow-up based on integrated analysis.

Business Intelligence and Analytics

[Image: Dashboard with multiple charts, KPIs, and trend indicators]

Business MM-CoT Analysis:

Step 1: Identify key performance indicators displayed in the dashboard.

Step 2: Analyze trend directions in time-series charts.

Step 3: Examine comparative metrics across different business units or time periods.

Step 4: Connect quantitative visual data with qualitative business context.

Step 5: Identify correlations between different metrics shown.

Step 6: Synthesize insights for strategic decision-making recommendations.

Best Practices for MM-CoT Implementation

Visual Attention and Description

- Systematic Scanning: Develop consistent patterns for examining visual information

- Descriptive Clarity: Describe visual elements before interpreting them

- Detail Hierarchy: Start with major elements, then focus on relevant details

- Spatial Awareness: Consider spatial relationships and layout significance

Cross-Modal Integration

- Explicit Connections: Clearly state how visual and textual information relate

- Evidence Triangulation: Use multiple sources to support conclusions

- Contradiction Resolution: Address conflicts between visual and textual information

- Context Preservation: Maintain awareness of the broader context throughout analysis

Quality Assurance

Self-Checking Framework:

1. Did I accurately describe what I see in the image?

2. Have I properly integrated textual information?

3. Are my connections between modalities logical and well-supported?

4. Have I distinguished between observations and inferences?

5. Does my final conclusion synthesize all available evidence?

Limitations and Considerations

Technical Limitations

- Image Resolution: Poor quality images can limit analysis accuracy

- Model Capabilities: Not all language models can process visual information effectively

- Context Windows: Large images and extensive text may exceed processing limits

- Modality Alignment: Ensuring visual and textual information are properly synchronized

Cognitive Challenges

- Attention Management: Balancing focus between visual and textual elements

- Bias Prevention: Avoiding overreliance on one modality

- Complexity Scaling: Managing reasoning chains as problem complexity increases

- Error Propagation: Mistakes in early steps can compound through the reasoning chain

Future Developments

Enhanced Integration Techniques

Research continues into:

- Dynamic Attention: Adaptively focusing on relevant visual regions based on reasoning needs

- Multi-Scale Analysis: Reasoning across different levels of visual detail

- Temporal Sequences: Extending MM-CoT to video and time-series visual data

- Interactive Reasoning: Enabling back-and-forth refinement between visual and textual analysis

Tool Integration

- Automated Visual Analysis: Integrating computer vision tools to augment human-interpretable reasoning

- Cross-Modal Search: Using reasoning outcomes to guide additional information retrieval

- Collaborative Reasoning: Combining multiple models or agents for complex multimodal problems

References

- Zhang, Z., et al. (2023). Multimodal Chain-of-Thought Reasoning in Language Models. arXiv preprint arXiv:2302.00923

- Li, J., et al. (2022). BLIP: Bootstrapping Language-Image Pre-training for Unified Vision-Language Understanding and Generation. ICML 2022

- Lu, J., et al. (2022). Unified-IO: A Unified Model for Vision, Language, and Multi-Modal Tasks. arXiv preprint arXiv:2206.08916

- Alayrac, J., et al. (2022). Flamingo: a Visual Language Model for Few-Shot Learning. NeurIPS 2022